Introduction

Recently I had to set up a few servers to be used for a k3s cluster.

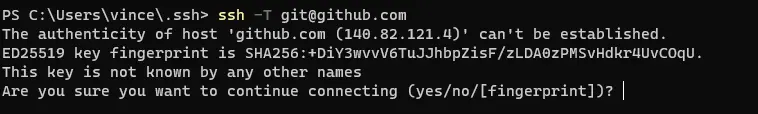

While I was setting them up I was reminded of the “TOFU” (Trust-on-first-use) flow that most people use when connecting to ssh servers for the first time.

TLDR: when you connect to an SSH server for the first time, you are presented with an identifier (the fingerprint) of the server’s public key. You are prompted to verify that this key is the expected one and really comes from the server you are trying to connect to. With TOFU, you simply accept this public key, which is then stored locally for any future connections.

This looks like the below:

While it’s unlikely that a connection gets hijacked and a fake server is being served, unlikely does not mean impossible, so a fix is needed :P. Enter OpenSSH CA Signed Host Keys.

A longer explanation, written by the AI Overlords, can be found here.

OpenSSH CA Signed Host Keys

In SSH, there are the standard flows of passwords and public/private keypairs for authentication. What fewer people know is that you can also use the Certificate Authority mechanism to build on top of the public/private keypair flow.

This CA mechanism would solve our TOFU problem: instead of blindly trusting the first server we connect to, we can rely on the CA as a trusted third party, just like the public web works with HTTPS. Additionally, this trusted third party would actually be ourselves if we own and manage the CA private key.

So how does it work in practical terms?

First off we need to generate a keypair for our Certificate Authority:

    # on local system
    ssh-keygen -t ed25519 -f HostKeyCA -C HostKeyCA

    # proceed to upload HostKeyCA and HostKeyCA.pub to the server, for example to /etc/ssh/

Next, we need to sign the server’s host keypairs with this CA keypair:

    # assuming this is the DNS name for your server
    export fqdn="server1.example.com"

    # sign the rsa host key, making it valid for several principals (-n flag) for a time period of roughly 10 years (-V flag)
    # -I sets the certificate identity, -h marks the result as a host certificate
    ssh-keygen -s /etc/ssh/HostKeyCA -I "$(hostname)" -n "$(hostname -I|tr ' ' ',')$(hostname),${fqdn}" -V -5m:+520w -h /etc/ssh/ssh_host_rsa_key.pub

    # proceed to do the same for the other host keys
    ssh-keygen -s /etc/ssh/HostKeyCA -I "$(hostname)" -n "$(hostname -I|tr ' ' ',')$(hostname),${fqdn}" -V -5m:+520w -h /etc/ssh/ssh_host_ed25519_key.pub
    ssh-keygen -s /etc/ssh/HostKeyCA -I "$(hostname)" -n "$(hostname -I|tr ' ' ',')$(hostname),${fqdn}" -V -5m:+520w -h /etc/ssh/ssh_host_ecdsa_key.pub

    # remove the uploaded CA private key (the public key stays, it is needed below)
    rm /etc/ssh/HostKeyCA
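To check what a signing run actually produces, `ssh-keygen -L` prints a certificate's type, signing CA, principals and validity window. The sketch below is a dry run and not part of the original setup: it creates a throwaway CA and host key in a temporary directory (the `demo-host` name and the scratch paths are made up for illustration), signs the key with the same flags as above, and prints the result.

```shell
#!/bin/sh
set -eu

# Work in a scratch directory so nothing under /etc/ssh is touched.
workdir="$(mktemp -d)"
trap 'rm -rf "$workdir"' EXIT

# Throwaway CA and host keypairs (hypothetical names, empty passphrases).
ssh-keygen -q -t ed25519 -N '' -C HostKeyCA -f "$workdir/HostKeyCA"
ssh-keygen -q -t ed25519 -N '' -C demo-host -f "$workdir/ssh_host_ed25519_key"

# Sign the host public key: -h = host certificate, -I = identity,
# -n = principals, -V = validity window.
ssh-keygen -q -s "$workdir/HostKeyCA" -I demo-host \
  -n "demo-host,server1.example.com" -V -5m:+520w \
  -h "$workdir/ssh_host_ed25519_key.pub"

# Print the certificate details (type, signing CA, principals, validity).
cert_info="$(ssh-keygen -L -f "$workdir/ssh_host_ed25519_key-cert.pub")"
printf '%s\n' "$cert_info"
```

On a real server the same inspection would be `ssh-keygen -L -f /etc/ssh/ssh_host_ed25519_key-cert.pub`, which is a quick way to confirm that the principals include the name you will actually connect with.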

Afterwards, we need to tell the ssh service to use the public key of HostKeyCA as a cert-authority and configure the signed host certificates (generated by the previous commands) in the sshd_config.

    echo "@cert-authority * $(cat /etc/ssh/HostKeyCA.pub)" >> /etc/ssh/ssh_known_hosts
    for i in /etc/ssh/ssh_host*_key-cert.pub; do echo "HostCertificate $i" >>/etc/ssh/sshd_config; done

And finally, we should reload the ssh service to apply the changes.

    systemctl reload sshd
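One thing to watch: the `for` loop appends a `HostCertificate` line every time it runs, so running it twice leaves duplicate entries in sshd_config. A minimal rerun-safe sketch follows; `add_host_certificates` is a hypothetical helper name, not something OpenSSH ships.

```shell
#!/bin/sh
set -eu

# add_host_certificates CONFIG CERT...
# Appends one "HostCertificate <path>" line per certificate to CONFIG,
# skipping any line that is already present.
add_host_certificates() {
  config="$1"
  shift
  for cert in "$@"; do
    line="HostCertificate $cert"
    # -x: match the whole line, -F: fixed string (no regex), -q: quiet
    grep -qxF "$line" "$config" || printf '%s\n' "$line" >> "$config"
  done
}
```

It would be called as `add_host_certificates /etc/ssh/sshd_config /etc/ssh/ssh_host*_key-cert.pub`. Running `sshd -t` before the reload checks the configuration for errors, which helps against locking yourself out.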

Now on the local system, we can tell our ssh client to trust this certificate authority using:

    echo "@cert-authority * $(cat HostKeyCA.pub)" >> ~/.ssh/known_hosts
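The `*` pattern tells the client to accept this CA for every host it ever connects to. The known_hosts format also accepts narrower wildcard patterns, so the trust can be limited to your own domain. A small sketch, assuming a hypothetical helper `trust_host_ca` and `*.example.com` as a placeholder pattern:

```shell
#!/bin/sh
set -eu

# trust_host_ca PATTERN CA_PUBKEY_FILE KNOWN_HOSTS
# Adds "@cert-authority PATTERN <ca public key>" to KNOWN_HOSTS once.
trust_host_ca() {
  pattern="$1"
  ca_pub="$2"
  known_hosts="$3"
  line="@cert-authority $pattern $(cat "$ca_pub")"
  # Create the file if needed so grep has something to read.
  touch "$known_hosts"
  grep -qxF "$line" "$known_hosts" || printf '%s\n' "$line" >> "$known_hosts"
}
```

For example: `trust_host_ca '*.example.com' HostKeyCA.pub ~/.ssh/known_hosts`. The trade-off is that a domain pattern no longer covers connections made by bare IP address or short hostname, so those would fall back to the regular prompt.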

Problems with manual approach

In the above steps, I outlined the basic steps to configure OpenSSH host keys signed by a Certificate Authority. However, there are some glaring flaws:

- The CA keypair needs to be uploaded to the server by a system administrator

- this implies connecting to the server yourself … thus you would still do TOFU …

- It’s a hassle to run the commands manually, and one could easily lock themselves out if it’s done incorrectly

In essence: if we do it manually, we’re still stuck with TOFU.

Terraform + cloud-init to the rescue

There is a solution, using two Infrastructure-as-Code tools: Terraform and cloud-init.

Using terraform, we can create servers automatically + generate and manage a CA keypair. Additionally most cloud providers, if not all, allow you to pass a cloud-init file to the server resource, which allows us to configure the server as needed on first boot.

In my case I’m using Hetzner Cloud, so to provision the hetzner cloud servers in Terraform I have the following snippet:

    resource "hcloud_server" "k3s_server" {
      count       = local.k3s_server_count
      name        = "server${count.index}.k3s.${terraform.workspace}.hc.vincentbockaert.xyz"
      server_type = "cax11"
      image       = "debian-12"
      datacenter  = "nbg1-dc3"
      user_data   = data.template_cloudinit_config.k3s_server[count.index].rendered

      labels = {
        "Environment" = terraform.workspace
        "Arch"        = "ARM64"
        "NodeNumber"  = count.index
        "k3s"         = "server"
      }

      ssh_keys = [
        hcloud_ssh_key.this.id
      ]

      public_net {
        ipv4_enabled = true # really wish i didnt need this ... but holy f there are too many services IPv4 only and I dont want to a NAT64 service
        ipv6_enabled = true
      }
    }

    resource "hcloud_ssh_key" "this" {
      name       = "default"
      public_key = var.defaultSSHPublicKey
    }

In the above code, two server resources are created with the following:

- Passing in a template-rendered cloud-init config

- Some labels (which I use in other code)

- A default ssh key usable to authenticate as the root user

- This is disabled via cloud-init, instead a sudo-user is created

- Configuring public addresses

(Technically I’m creating multiple of these resources using the count method, but let’s ignore that as it’s not relevant.)

As mentioned a rendered cloud-init configuration is passed, so let’s create this file and configure it to make a sudo-user, assigning the ssh public key (which we get through the Terraform template renderer) and disable the root user.

    #cloud-config
    users:
      - name: ${username}
        sudo: ALL=(ALL) NOPASSWD:ALL
        groups: users, sudo
        home: /home/${username}
        shell: /bin/bash
        lock_passwd: true
        ssh_authorized_keys:
          - ${defaultSSHPublicKey}

    ssh_pwauth: false

    # disable root over ssh
    disable_root: true

Now that the root user is disabled but we can still use a sudo-powered user, we can configure the CA Signed Host Keys:

    fqdn: ${fqdn}
    prefer_fqdn_over_hostname: true

    # SSH CA Certificates set up
    # upload the ca private key and public key to the server
    write_files:
      - path: /etc/ssh/HostCA
        encoding: b64
        owner: root:root
        permissions: '0600'
        content: ${HostCAPrivateKey}
      - path: /etc/ssh/HostCA.pub
        owner: root:root
        permissions: '0644'
        content: ${HostCAPublicKey}

    # ssh host key signing with ca certificates
    runcmd:
      # sign and configure ssh host keys
      - ssh-keygen -s /etc/ssh/HostCA -I "$(hostname)" -n "$(hostname -I|tr ' ' ',')$(hostname),${fqdn}" -V -5m:+520w -h /etc/ssh/ssh_host_rsa_key.pub
      - ssh-keygen -s /etc/ssh/HostCA -I "$(hostname)" -n "$(hostname -I|tr ' ' ',')$(hostname),${fqdn}" -V -5m:+520w -h /etc/ssh/ssh_host_ed25519_key.pub
      - ssh-keygen -s /etc/ssh/HostCA -I "$(hostname)" -n "$(hostname -I|tr ' ' ',')$(hostname),${fqdn}" -V -5m:+520w -h /etc/ssh/ssh_host_ecdsa_key.pub
      # configure ca usage on the server
      - echo "@cert-authority * $(cat /etc/ssh/HostCA.pub)" >> /etc/ssh/ssh_known_hosts
      # configure new signed host certificates
      - for i in /etc/ssh/ssh_host*_key-cert.pub; do echo "HostCertificate $i" >>/etc/ssh/sshd_config; done
      # remove the ca private key
      - rm -f /etc/ssh/HostCA
      # reload sshd to apply changes
      - systemctl reload sshd

In essence we are doing the same thing as the manual steps with the difference being that this is run on first boot, by the server itself, thus we never touch the server ourselves and never have to go through the TOFU flow.
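The `-n` value in the `ssh-keygen` lines is easy to misread. `hostname -I` prints the addresses separated by spaces (with a trailing space, at least on Debian as far as I can tell), so after `tr` the list already ends in a comma before `$(hostname)` is appended. Here is a small sketch that builds the same comma-separated principals list more explicitly; `build_principals` is a hypothetical helper and the addresses in the usage note are documentation examples.

```shell
#!/bin/sh
set -eu

# build_principals "IP1 IP2 ..." SHORT_HOSTNAME FQDN
# Prints the principals as one comma-separated list, regardless of
# leading or trailing whitespace in the address list.
build_principals() {
  ips="$1"
  short="$2"
  fqdn="$3"
  list=""
  # $ips is intentionally unquoted so it splits on whitespace.
  for item in $ips "$short" "$fqdn"; do
    list="${list:+$list,}$item"
  done
  printf '%s\n' "$list"
}
```

Calling `build_principals "10.0.0.5 2001:db8::1 " server1 server1.example.com` gives `10.0.0.5,2001:db8::1,server1,server1.example.com`. On a server the first two arguments would be `"$(hostname -I)"` and `"$(hostname)"`.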

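The only downside is that we still have to upload the CA private key to do the actual signing and thus temporarily expose it on the naked server, until we remove it in one of the final cloud-init steps. Keep in mind that cloud-init also stores a copy of the user data it received on the instance (under /var/lib/cloud), so deleting /etc/ssh/HostCA alone may not remove every trace of the key.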

Now that we’ve written the cloud-init.yml file, we can think about the Terraform code required to render it correctly and pass it along to the servers we create.

For this we can use two data sources: template_cloudinit_config combined with template_file. The template_file data source allows variable substitution when rendering the cloud-init file.

For example:

    data "template_cloudinit_config" "k3s_agent" {
      count = local.k3s_agent_count

      part {
        filename     = "node-init.yml"
        content_type = "text/cloud-config"
        content      = data.template_file.k3s_agent[count.index].rendered
      }

      gzip          = true
      base64_encode = true
    }

    data "template_file" "k3s_agent" {
      count    = local.k3s_agent_count
      template = file("cloud-init.yml")

      vars = {
        username            = var.node_ssh_username
        defaultSSHPublicKey = hcloud_ssh_key.this.public_key
        HostCAPrivateKey    = base64encode(tls_private_key.ca_host_key.private_key_openssh)
        HostCAPublicKey     = tls_private_key.ca_host_key.public_key_openssh
        fqdn                = "agent${count.index}.k3s.${terraform.workspace}.hc.vincentbockaert.xyz"
      }
    }

    data "template_cloudinit_config" "k3s_server" {
      count = local.k3s_server_count

      part {
        filename     = "node-init.yml"
        content_type = "text/cloud-config"
        content      = data.template_file.k3s_server[count.index].rendered
      }

      gzip          = true
      base64_encode = true
    }

    data "template_file" "k3s_server" {
      count    = local.k3s_server_count
      template = file("cloud-init.yml")

      vars = {
        username            = var.node_ssh_username
        defaultSSHPublicKey = hcloud_ssh_key.this.public_key
        HostCAPrivateKey    = base64encode(tls_private_key.ca_host_key.private_key_openssh)
        HostCAPublicKey     = tls_private_key.ca_host_key.public_key_openssh
        fqdn                = "server${count.index}.k3s.${terraform.workspace}.hc.vincentbockaert.xyz"
      }
    }

And finally … we can’t forget to actually create the CA keypair that is used on the server and passed along to cloud-init, via the template resources.

    resource "tls_private_key" "ca_host_key" {
      algorithm = "ED25519" # most modern, RSA and ECDSA can also be used
    }

    resource "tls_private_key" "ca_user_key" {
      algorithm = "ED25519" # most modern, RSA and ECDSA can also be used
    }

Sadly … we still have a final manual step for the client / local systems, where the ssh client needs to be set to trust this certificate authority. For this, the Terraform output seen below generates a nice command; simply copy-paste the output into a terminal.

    output "ca_host_key_command_to_use_in_client" {
      value = "To make use of the Host Key CA in your ssh client, use the command: echo '@cert-authority * ${tls_private_key.ca_host_key.public_key_openssh}' >> ~/.ssh/known_hosts"
    }